END eSAFETY: ABOLISH THE ONLINE SAFETY ACT

THE eSAFETY PROBLEM

The eSafety Commissioner holds office under the Online Safety Act 2021. This world-first attempt at regulating the internet has instead been a disaster for Australians and for our international reputation.

Whilst the Online Safety Act 2021 was supposed to contain safeguards protecting political expression, the Commissioner has been able to circumvent them entirely. Her office has acted in a partisan manner, pursuing political vendettas. It is time to put an end to this failed experiment.

END THE ONLINE SAFETY ACT TODAY

The Online Safety Act is the primary legislation governing the eSafety Commissioner. It grants a range of powers under which content can be ordered removed from the internet.

In short, it has been misused. Rather than protecting the public, the eSafety Commissioner has removed material for political purposes. People who have genuinely been subjected to abuse have not been protected, while her office has waged political crusades. The result has been to undermine Australian democracy and damage Australia's international reputation.

Unfortunately, with powers of this kind, the safeguards are easily avoided. The Commissioner fails to give notice to all affected people, which limits challenges. Her office also simply ignores many of the constraints in the legislation, including the requirement that she must not interfere with the implied freedom of political communication.

We are the Free Speech Union of Australia. We exist to protect and promote freedom of expression for all Australians. To find out more, follow our Twitter feed or visit our website, where you can join us.

We will deliver a list of names and postcodes to Parliament in due course. Your email address will only be known to the Free Speech Union of Australia.

The claim that the eSafety Commissioner is effective at keeping people safe on the internet is a myth. She has issued very few formal notices. For children, she issued a grand total of 13 notices in the space of a year, which were letters to school principals telling them to stop particular children from bullying others.

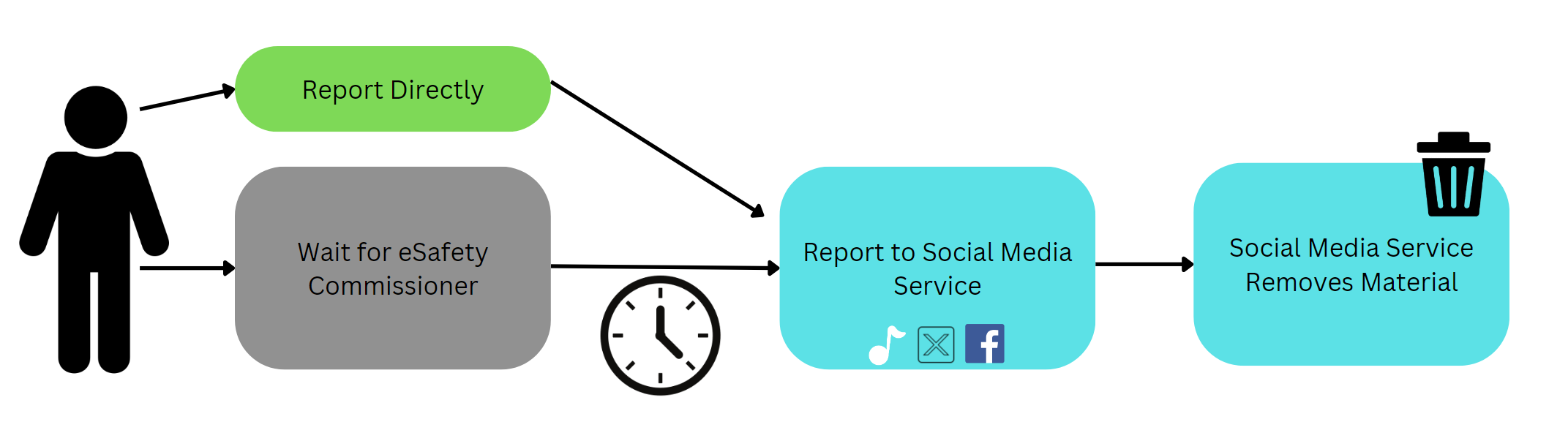

The 'informal' investigations are also ineffective. It is easier to report directly to the platforms than to contact the eSafety Commissioner. Here's an illustration.

It depends on what you consider to be an 'investigation'. If reading a report saying a post is 'bad' and taking a few minutes to decide whether that complaint is justified counts as an investigation, then the Commissioner is right.

The truth is that each social media provider carries out this activity millions of times a day when it moderates posts; the scale can be seen in the European Union's own database. On that basis, we would say the eSafety Commissioner is considerably exaggerating what her office does.

Her approach is authoritarian. You don't need to take our word for it. The Electronic Frontier Foundation has said that the injunction she sought "set[s] a dangerous precedent that could legitimise practices of authoritarian governments, which do not fully value the rights to freedom of speech and access to information."

They also noted that her approach risks "a fragmented and splintered internet, undermining freedom of expression and access to information worldwide".

In the X Corp proceedings, she has also been alleged to have exceeded her jurisdiction; in other words, to have purported to exercise legal powers she does not have. We are also aware of her failing to give end users notice of their appeal rights, making it impossible for them to mount a challenge. This makes her conduct even more worrying.

There are many answers. It starts with parental responsibility and controlling the use of devices. For example, parents can take steps to keep children away from social media, or limit access at the device level.

The idea that an eSafety Commissioner will help by taking down content is untrue. In respect of genuine abuse, existing platforms already have very powerful incentives to remove it: advertisers do not wish to see their content next to porn or genuine cyber-abuse. Unfortunately, the eSafety Commissioner spends her days targeting other material, including lawful political speech.

If takedown notices are needed, they should be issued judicially, following a professional process. For example, New Zealand's process has the safeguard of requiring a judicial officer to be involved, as well as a technology expert to advise on appropriate action. Australia instead has one unaccountable person attempting to do this: the eSafety Commissioner.

The eSafety Commissioner obtained a temporary injunction seeking to enforce her original notice to take down a video of the attack on Mar Mari Emmanuel. X has challenged this injunction, as well as the underlying notice.

The legal arguments primarily concern:

- What content the eSafety Commissioner is entitled to ban as likely being 'Refused Classification' content.

- What steps are 'reasonable' for X to take to make the material unavailable to Australians.

- Whether there was a valid notice to begin with.

The X and Billboard Chris cases arise under different sections of the Act. In X's case, the Commissioner claimed the video was likely to be 'RC' (Refused Classification) content and should therefore be taken down. The main error is that the video is unlikely to meet that standard.

In Billboard Chris's case, the claim is that the post was 'adult cyber abuse', namely content targeted at an individual (Teddy Cook) with a view to causing him serious psychological harm. The main legal error is that the removal notice was aimed at material that does not meet the statutory definition of adult cyber abuse. It was not targeted at an Australian adult, and it was a relatively mild political statement. There was nothing obscene or violent about it.

As it does not meet the statutory definition, the Commissioner simply had no jurisdiction to issue the notice.

Both cases reflect the same pattern of conduct by the eSafety Commissioner: exceeding her jurisdiction and misunderstanding her powers. Both also illustrate the risks of setting up an eSafety Commissioner and giving one person the purported power to police the internet.